After 15 years preventing drift, debt, and silos in design organizations, here’s what I believe you need to know before your team uses AI at scale.

The High-Stakes Reality A Lot Of AI Content Ignores

The question isn’t whether AI makes design faster; it’s whether AI makes design faster while preserving the user advocacy and design thinking that creates products people actually need.

Much of today’s AI+UX discourse celebrates “vibe coding”: generate fast, ship messy, iterate later. This works for some contexts (pre-product-market fit startups, low-stakes experiments, small teams that can course-correct instantly). But not all design happens in low-stakes contexts.

When you’re serving millions of users, managing regulated financial products, or building infrastructure that cities depend on, iteration has consequences:

- A bad release affects hundreds of thousands of users

- Design drift creates costly rework

- Inconsistency erodes trust

- Accessibility failures become legal liability

AI is not a replacement for design operations. AI is a stress test for your design operations. If your team struggles with drift, debt, or silos now, AI will accelerate those failure modes. But if you have strong operational scaffolding, AI can strengthen your human-centered design practice.

The challenge: current AI+UX research focuses heavily on what’s possible (individual copilots, AI-powered design systems, agentic workflows) but rarely addresses what’s appropriate for different organizational contexts, team maturity levels, or risk profiles.

Why DesignOps Matters More in AI-Native Orgs (Not Less)

I’ve seen this pattern throughout my career, not just with design tools but across every major technology shift:

The arrival of the iPhone (2007): Companies scrambled to build mobile apps. Those without mobile design standards shipped fragmented experiences: different UI patterns on iOS vs. Android vs. web, different features on each platform.

The disruption and disappearance of Flash (2010-2017): The shift from Flash to HTML5 forced wholesale redesigns. Teams without modular design systems had to rebuild from scratch. Those with systems could migrate incrementally.

These transitions show similar dynamics, though each context differs. The organizational pattern remains consistent:

- New capability arrives (creates excitement)

- Early adopters experiment (creates hype)

- Organizational structures lag (creates chaos)

- Gap creates expensive failures (rework, quality issues, team churn)

- Mature orgs retrofit operations to stabilize (slow, painful)

More recently, I’ve seen this with Figma’s real-time collaboration (distributed teams needed new workflow norms), design systems maturation (teams needed to balance consistency with autonomy), and remote work becoming standard (leaders needed explicit communication frameworks).

AI is following similar dynamics, but faster and with higher stakes.

The Amplification Effect

If one designer can now generate 10 variants in an hour (vs. 2-3 before) and your QA process was already a bottleneck, you now have roughly 3-5x the review backlog.

If your design system has gaps, AI will exploit them, generating designs that look on-brand but violate accessibility standards or interaction patterns.

If your teams are siloed, AI gives each silo superpowers, accelerating divergence.

My position: AI doesn’t make DesignOps obsolete. It makes weak DesignOps extremely costly, particularly in high-stakes environments.
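The backlog arithmetic behind the first example above can be made explicit. This is a minimal sketch, assuming review capacity stays fixed while output grows; the function name is hypothetical and the numbers are the ones quoted in the text.

```python
def review_backlog_multiplier(variants_before: float, variants_after: float) -> float:
    """How many times more review work arrives if review capacity stays fixed."""
    if variants_before <= 0:
        raise ValueError("variants_before must be positive")
    return variants_after / variants_before


# Figures from the text: 10 variants per hour now, versus 2-3 before.
low = review_backlog_multiplier(3, 10)
high = review_backlog_multiplier(2, 10)
print(f"Review load grows {low:.1f}x to {high:.1f}x")
```

The upper end of that range applies only when the earlier baseline was two variants per hour; either way the reviewers, not the designers, become the constraint.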

What Current Research Gets Right (And Where the Gaps Are)

Recent research from Nielsen Norman Group’s State of UX 2026 (January 2026), Salesforce on human-AI collaboration, Deloitte’s AI-native organization research (2025), and academic sources on human-AI co-ideation describes four emerging operating models for AI in design teams:

- Individual copilot workflows (AI as assistant)

- AI-powered design systems (AI generates within constraints)

- Agentic UX factory (AI as delegated team member)

- Design Intelligence Ops (cross-functional AI governance)

These models map well to NN/g’s DesignOps framework: how we work together, how we get work done, how our work creates impact.

But much of this research leaves gaps. It describes what’s possible, not when to do it, for whom, or at what organizational cost.

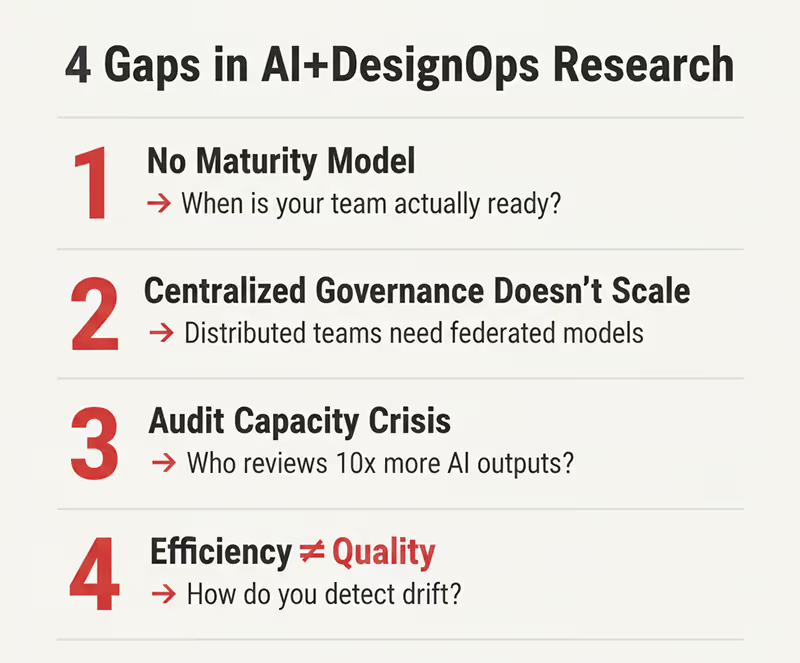

Based on my years scaling design operations (and witnessing the failure modes when teams move too fast or too slow), here are the four gaps that I believe will hurt you if you ignore them. These examples represent successful implementations; teams with similar approaches may face different outcomes based on market conditions, funding, or organizational culture.

Four Critical Gaps in Current AI+DesignOps Research

Gap 1: No Maturity Model for AI Adoption

What research says: AI tools and workflows are available for design teams to adopt, from simple copilots to complex agentic systems.

What’s missing: Readiness assessment. When is your team actually ready for each model? What foundations must exist first?

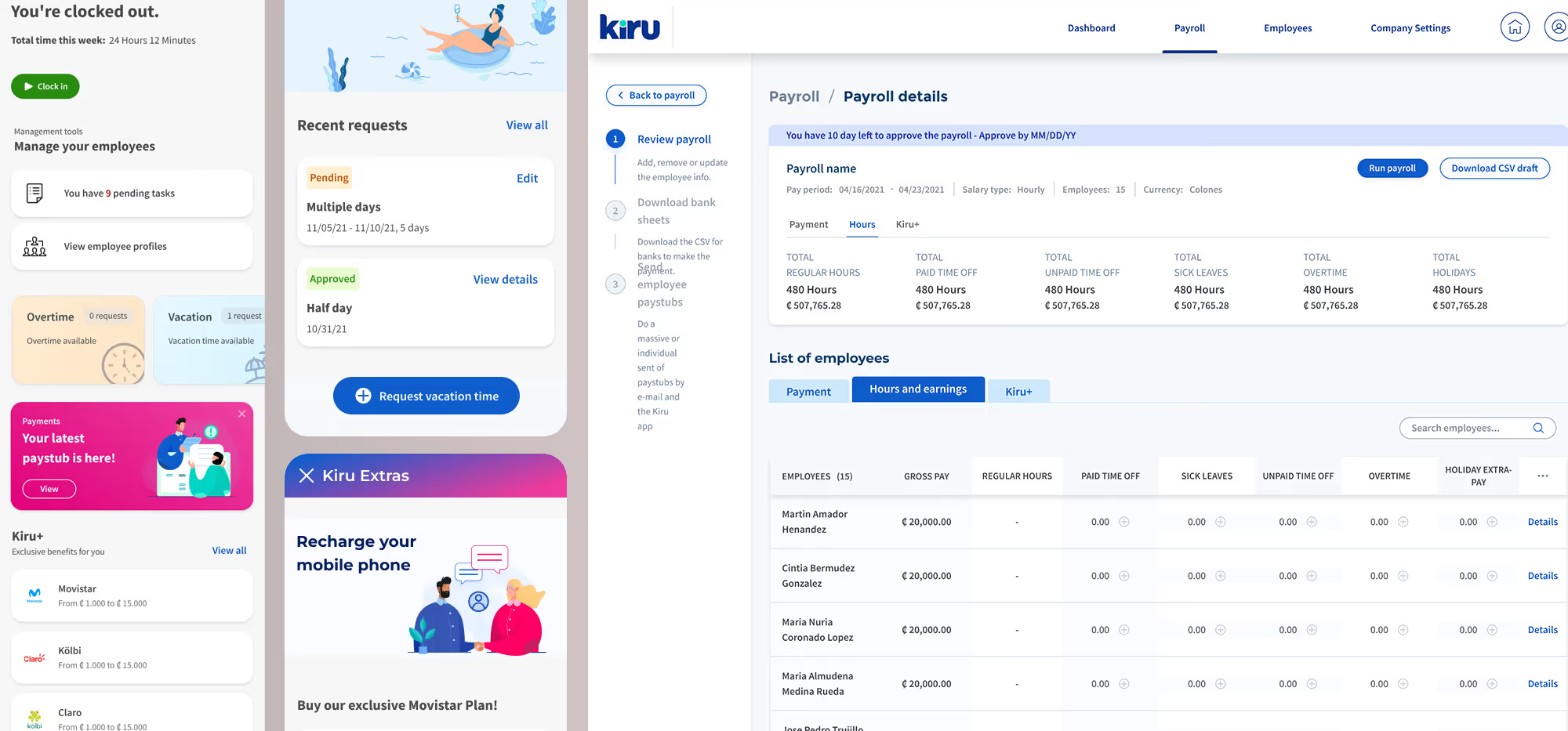

Pattern from experience: At KIRU, I joined as the first design hire (employee #7) for a LATAM payroll startup. We could have iterated (vibed) our way to our initial growth, as many startups do. Instead, we implemented operational infrastructure early: at roughly 1,000 users and 100 corporate clients, we deployed Monday.com for customer feedback triage and Pendo for in-app analytics. This early investment was associated with our ability to scale to 9,000 users and 400 clients with an NPS of 80 and a CSAT of 83%, both world-class scores. We weren’t just moving fast; we were learning fast because we’d built the feedback loops to do so.

Operational recommendation: Assess process maturity before adopting AI. Maturity isn’t about team size; it’s about operational discipline. A 50-person team with weak processes is less ready than a 10-person team with strong ones.

Key question: Do you have documented design workflows, a mature design system, and quality metrics? If no, fix those foundations before adding AI. The time to build operational infrastructure is BEFORE you need it desperately.

Gap 2: Governance Models Assume Centralized Control

What research says: Deloitte’s AI-native organization research recommends extending existing governance frameworks to cover AI, treating prompts, policies, and evaluation criteria as managed assets.

What’s missing: How does this work for distributed teams? Embedded designers in product teams can’t wait for centralized “AI approvals” without creating bottlenecks. Additionally, who’s responsible for auditing AI outputs for quality, brand consistency, and potential bias?

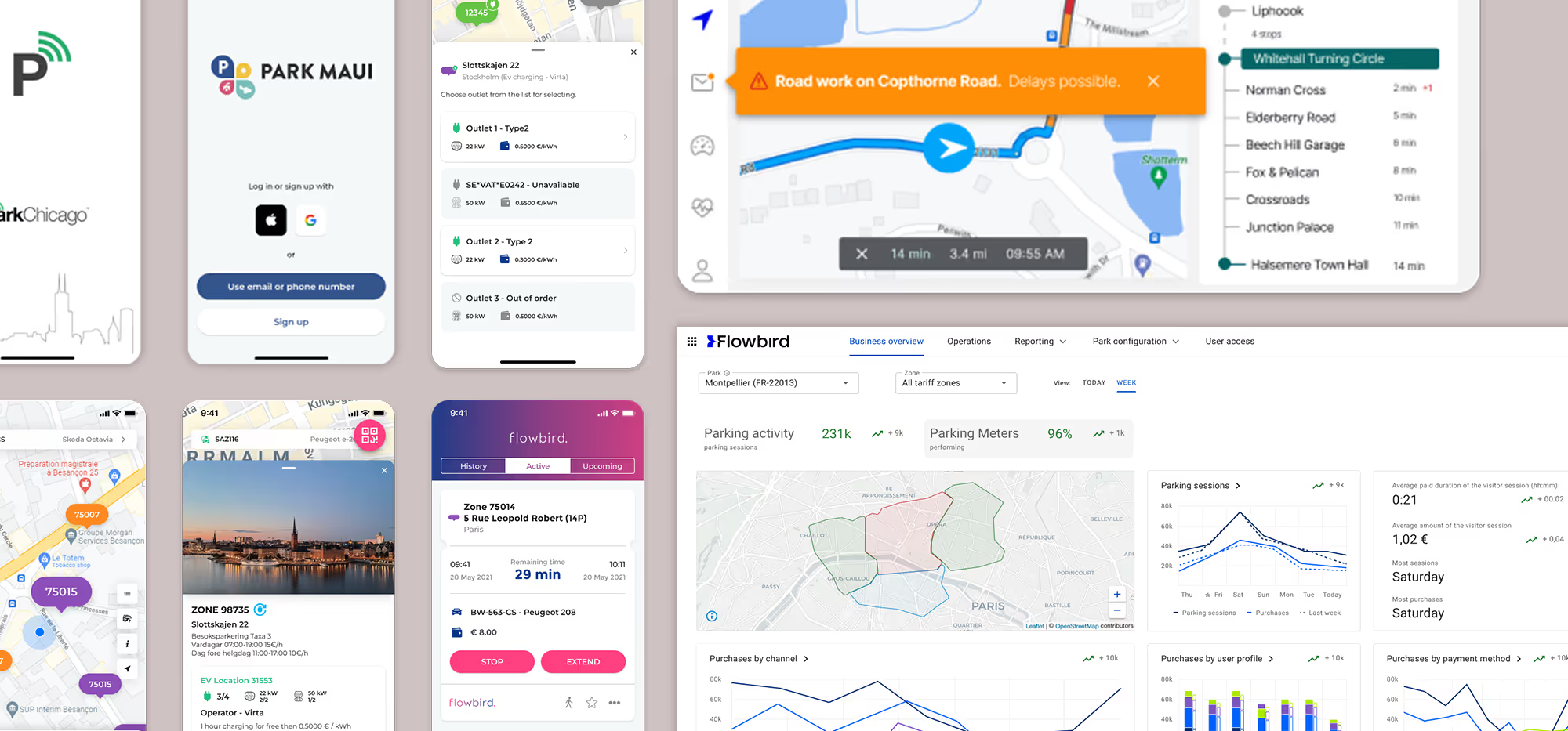

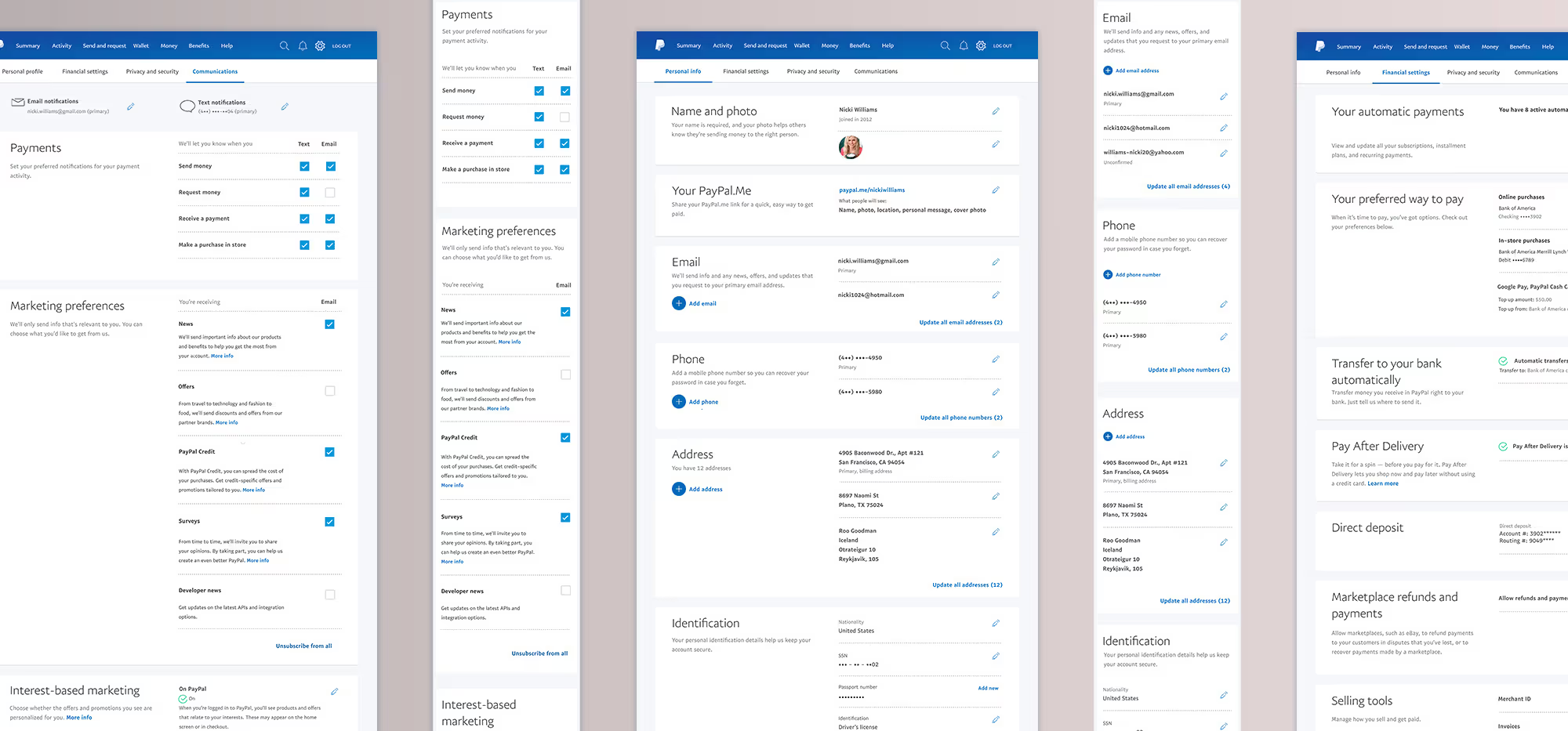

Pattern from experience: At PayPal, leading design for 10M+ users across 15 international markets, GDPR compliance required distributed decision-making with centralized guardrails (not top-down control). At Flowbird, managing 10+ white-label parking apps, we needed centralized brand DNA (Flowbird UX standards, accessibility requirements) with local customization (city-specific payment rules, branding). This required federated governance: a central design system team provided components and standards; city teams used them within guardrails.

Operational recommendation: Work with stakeholders to implement federated governance for AI. A center of excellence defines AI usage policies, evaluation standards, and design system rules. Product teams execute within guardrails and escalate edge cases. This prevents both chaos (everyone experimenting independently) and bottlenecks (everything requires central approval).
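One way to picture federated governance is as a central policy that product teams may override only on an explicit allowlist of keys, with everything else escalated. The sketch below is illustrative only: the policy keys, the tool name, and the `resolve_policy` helper are all hypothetical, and the 4.5 contrast value is the WCAG AA threshold for normal-size text.

```python
# Central policy owned by the center of excellence (all keys are hypothetical).
CENTRAL_POLICY = {
    "min_contrast_ratio": 4.5,      # WCAG AA threshold for normal-size text
    "allowed_ai_tools": ("tool_a",),
    "brand_palette": "core",
}

# Keys a product or city team may change without asking for approval.
LOCALLY_OVERRIDABLE = {"brand_palette", "payment_rules"}


def resolve_policy(local_overrides: dict) -> tuple[dict, list[str]]:
    """Merge local overrides into the central policy; collect anything needing escalation."""
    effective = dict(CENTRAL_POLICY)
    escalations = []
    for key, value in local_overrides.items():
        if key in LOCALLY_OVERRIDABLE:
            effective[key] = value       # within guardrails: applied locally
        else:
            escalations.append(key)      # outside guardrails: central value stays
    return effective, escalations


effective, escalations = resolve_policy(
    {"brand_palette": "city_blue", "min_contrast_ratio": 3.0}
)
print(effective["brand_palette"], effective["min_contrast_ratio"], escalations)
```

Here the local palette change goes through immediately, while the attempt to lower the contrast requirement is ignored and flagged for the central team, which is the chaos-versus-bottleneck balance described above.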

Key question: Can embedded designers use AI tools without creating brand inconsistency? Who owns the “AI design standards”: DesignOps, each pod or squad, or the ML team? Who reviews outputs for quality and fairness?

Gap 3: “Audit Interfaces” Without Audit Capacity

What research says: Jakob Nielsen’s 2026 predictions (January 2026) identify audit interfaces as a critical UX challenge: how to summarize long AI reasoning chains into glanceable, high-signal review surfaces for human oversight. He warns of “review fatigue,” where humans approve agent actions without true oversight because the friction of auditing outweighs the time saved.

What’s missing: Who does the auditing? When does a team of 8 designers have capacity to review AI outputs? If AI generates 10x more variants, do you need 10x more reviewers?

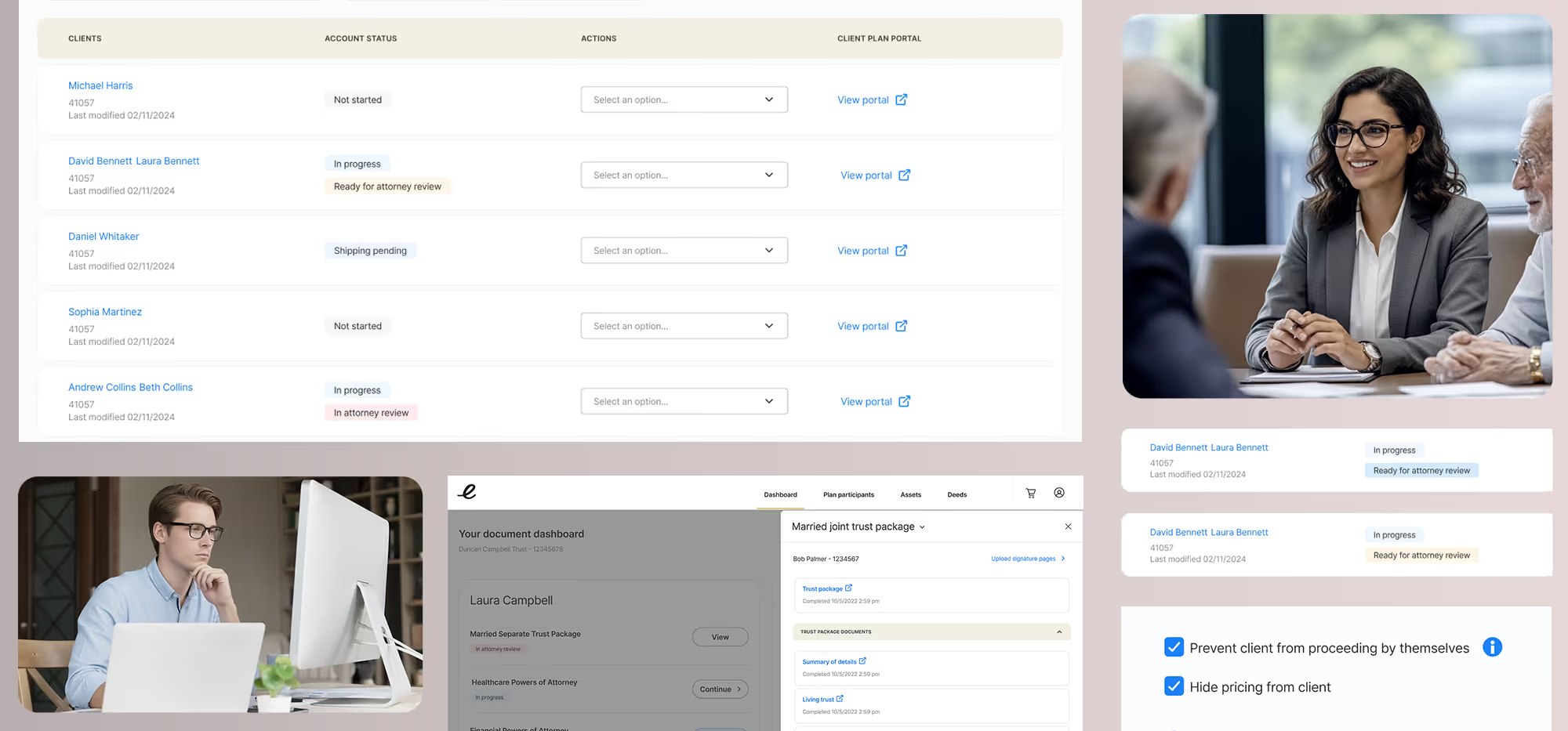

Pattern from experience: At Flowbird, reducing design cycle time from roughly 4 weeks to roughly 2 weeks (~45% reduction) didn’t come from faster production; it came from eliminating review bottlenecks through a scrum process and standardized handoff and QA workflows. At Estate Guru, a 50% reduction in user errors was associated with a UX audit and upfront constraints (design system standards, validation rules) rather than post-facto fixing.

Operational recommendation: Build quality in via constraints; don’t inspect it in afterward. Use design system tokens to constrain AI generation. Implement automated accessibility checks. Define behavioral contracts that specify what AI can do autonomously vs. what requires human review.
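A behavioral contract of this kind can be sketched as a small routing rule. This is a minimal illustration, not a prescribed implementation: the three flags on `ProposedChange` and the `route` function are hypothetical names, and a real contract would likely carry more conditions.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTONOMOUS = "autonomous (subject to spot-checks)"
    HUMAN_REVIEW = "human review required"


@dataclass(frozen=True)
class ProposedChange:
    uses_only_approved_tokens: bool   # hypothetical result of a design-token check
    passes_automated_a11y: bool       # hypothetical result of an accessibility checker
    touches_regulated_flow: bool      # e.g. payments or consent screens


def route(change: ProposedChange) -> Decision:
    """Apply the contract: escalate regulated flows and any failed guardrail."""
    if change.touches_regulated_flow:
        return Decision.HUMAN_REVIEW
    if not (change.uses_only_approved_tokens and change.passes_automated_a11y):
        return Decision.HUMAN_REVIEW
    return Decision.AUTONOMOUS


print(route(ProposedChange(True, True, False)).value)
print(route(ProposedChange(True, False, False)).value)
```

The point of the design is that human attention is spent only where a constraint failed or the stakes are inherently high, rather than on every generated variant.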

Key question: At what point does “review everything” become a bottleneck that negates AI speed benefits? Better to have strong guardrails with spot-checking than weak guardrails with exhaustive review.

Gap 4: ROI Measurement Beyond Efficiency

What research says: Most AI+UX discourse focuses on efficiency metrics: time saved, variants generated, cycle time reduction.

What’s missing: Quality metrics. Does AI maintain design quality at scale? How do you detect when AI-generated designs are degrading user experience? How do you measure whether AI-generated experiences serve all users equitably?

Pattern from experience: Working with Euvic on CapAssure (AlterDomus’s waterfall calculations and carried interest allocations platform), we observed a 45% improvement in user satisfaction and a 25% reduction in time-on-task. This wasn’t about speed; it was about optimizing for expert users’ mental models in a highly regulated environment. At PayPal, I led the Unified Card System redesign, which addressed feature drift from team silos: multiple teams had built card management features independently, creating 4 different “add a card” flows with inconsistent UI. The fix required operational coordination, not just visual polish. Outcome: a 40% increase in feature adoption and a 20% reduction in customer service calls.

Operational recommendation: Track drift metrics alongside efficiency metrics: design system adherence rate (percentage of UI using approved components vs. custom), cross-product consistency scores (do similar features behave similarly?), and rework rate (how often do we redesign because earlier work didn’t follow standards?). For organizations serving diverse user populations, track performance metrics across demographic groups to ensure AI-generated experiences work well for everyone. These are leading indicators that predict when AI-generated designs are accumulating debt or creating equity issues.
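Two of these drift metrics reduce to simple ratios. The sketch below shows one possible way to compute them; the function names are hypothetical, and the counts in the example are invented purely for illustration, not taken from any project described here.

```python
def adherence_rate(approved_instances: int, custom_instances: int) -> float:
    """Share of UI component instances that come from the approved design system."""
    total = approved_instances + custom_instances
    if total == 0:
        raise ValueError("no component instances to measure")
    return approved_instances / total


def rework_rate(redesigns_for_standards: int, designs_shipped: int) -> float:
    """Share of shipped designs later redone because they did not follow standards."""
    if designs_shipped == 0:
        raise ValueError("no shipped designs to measure")
    return redesigns_for_standards / designs_shipped


# Hypothetical audit counts, for illustration only.
adherence = adherence_rate(approved_instances=940, custom_instances=60)
rework = rework_rate(redesigns_for_standards=6, designs_shipped=40)
print(f"Adherence: {adherence:.0%}, rework: {rework:.0%}")
```

Tracked per sprint or per release, a falling adherence rate or a rising rework rate is the kind of leading indicator the recommendation describes.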

Key question: Are your quality metrics robust enough to detect when AI speeds up production but degrades outcomes? Efficiency without quality is just faster failure.

The Vibe Coding Question: When Speed Trumps Systems (And When It Doesn’t)

Much of the current AI+UX discourse assumes a “vibe coding” philosophy: generate fast, ship messy, iterate later. AI makes iteration cheap, so why plan?

This works, until it doesn’t.

When Vibe Coding Works

- Pre-product-market fit: You don’t know what you’re building yet. Speed of learning trumps quality of output.

- Low-stakes contexts: Internal tools, marketing experiments, throwaway prototypes.

- Small, co-located teams: 3-5 people in one room can course-correct instantly. No formal process needed.

- High designer judgment: If your team has deep craft and strong shared taste, they can “vibe” their way to quality.

- Strong design culture: When values and standards are deeply internalized, teams can experiment without formal governance.

When Vibe Coding Fails

- Scale: 10+ designers across distributed teams can’t “vibe” together. Drift is inevitable without systems.

- Regulated environments: You can’t iterate your way out of GDPR violations or SEC compliance issues.

- High-stakes products: For an NYC parking app with 850K MAU, one bad release affects hundreds of thousands of users.

- Design debt accumulation: Fast iteration without cleanup creates compounding technical debt. AI accelerates this.

The Hybrid Model

The answer isn’t “always plan” or “always vibe”; it’s context-dependent governance.

My design philosophy is human-centered, user-advocate, purpose-driven. I’m interested in creating products that serve real utility. Vibe coding optimizes for speed of shipping. Human-centered design optimizes for correctness of solution. Sometimes these align (early exploration). Often they conflict (high-stakes execution). Leaders must know which context they’re in.

My position: Most AI+UX content assumes everyone is in low-stakes, experimental contexts. My experience is with organizations where speed without systems creates significant risk, and where AI must strengthen (not erode) human-centered design practice.

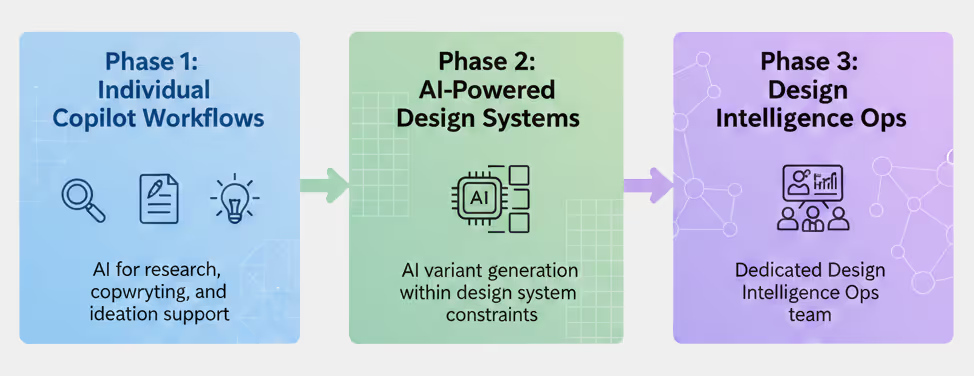

A Maturity-Based Implementation Framework

Phase 1: Individual Copilot Workflows

Prerequisites:

- Documented design process

- Basic design system or UI kit

- Quality definition (even if informal)

What to implement:

- AI for research synthesis (interview transcripts → themes)

- AI for copywriting (product descriptions, microcopy)

- AI for ideation support (generate concepts, not final designs)

Governance:

- Personal use policies: Allowed tools, prohibited uses (e.g., no uploading sensitive user data to public AI services)

- Basic training: How to write effective prompts, how to evaluate outputs

Timeline: 2-4 months

Metrics to track: Time saved on mechanical tasks, designer satisfaction with tools, and critically, time reinvested in user research and strategic thinking (not just efficiency gains).

Phase 2: AI-Powered Design Systems

Prerequisites:

- Mature design system (200+ components, documented tokens)

- DesignOps capacity (at least one person focused on systems)

- Established QA process

What to implement:

- AI variant generation within design system constraints

- Automated accessibility checks

- Token-based brand consistency enforcement

Governance:

- Federated model: Center of excellence defines AI policies and provides training. Product squads execute within guardrails and escalate edge cases.

- Design system constraints become AI constraints (if the design system doesn’t allow this pattern, AI can’t generate it)
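The idea that design system constraints become AI constraints can be illustrated with a token check applied to each generated variant. This is a rough sketch under stated assumptions: the token values, the flat `variant` dictionary shape, and the `off_system_values` helper are hypothetical stand-ins for whatever a real design system and generation tool expose.

```python
# Hypothetical approved tokens; a real system would load these from its token source.
APPROVED_TOKENS = {
    "color": {"#0B5FFF", "#FFFFFF", "#1A1A1A"},
    "spacing": {4, 8, 16, 24},
}


def off_system_values(variant: dict) -> list[str]:
    """Return every property of a generated variant that falls outside the token set."""
    violations = []
    for prop, value in variant.items():
        allowed = APPROVED_TOKENS.get(prop)
        # Unknown properties are treated as violations, not silently accepted.
        if allowed is None or value not in allowed:
            violations.append(f"{prop}={value}")
    return violations


generated = {"color": "#0B5FFF", "spacing": 10}
print(off_system_values(generated))
```

A variant with an empty violation list can proceed to spot-checking; anything else is rejected or regenerated before a human ever has to look at it.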

Timeline: 4-6 months (design system hardening + AI integration)

Metrics to track: Design system adherence rate, brand consistency across AI-generated variants, cycle time (should decrease without quality degradation), accessibility compliance rates.

Phase 3: Design Intelligence Ops

Prerequisites:

- Executive sponsorship

- Cross-functional buy-in (Product, Engineering, Data/ML)

- Dedicated budget for team and tools

What to implement:

- Dedicated Design Intelligence Ops team (UX researcher + DesignOps lead + ML partner)

- Integrated governance (design quality + ML evaluation)

- Audit interfaces for agentic workflows

- Continuous monitoring for drift, quality degradation, and equity issues

- Post-deployment monitoring with incident response and rollback mechanisms

- Technical orchestration layer for API-driven integration and legacy system compatibility

Why this requires maturity: Design Intelligence Ops is essentially an anti-silo mechanism at enterprise scale. You can’t build it if your foundation is shaky.

Timeline: 6-12 months with clear quarterly milestones

Metrics to track: Outcome metrics (activation, retention, user satisfaction across demographic segments), operational metrics (experiment velocity, design QA issues, bias audit results), team health (retention, psychological safety, skill development).

Note on technical complexity: 60%+ of leaders cite legacy system integration and technical orchestration as primary bottlenecks. Plan for API-driven integration between AI tools, design systems, and existing workflows. This is infrastructure work, not just design work.

What This Looks Like: Three Scenarios

Scenario A: Early-Stage Startup

- Context: Low maturity, high speed pressure, pre-PMF experimentation

- Do: Standardize design process first, then add individual copilot tools for research and copywriting

- Don’t: Build AI infrastructure before you’ve built feedback loops

- Timeline: 1-3 months to process baseline, then 2-3 months AI pilot

Scenario B: Scale-Up with Distributed Teams

- Context: Medium maturity, white-label complexity, distributed team coordination

- Do: Implement federated governance, harden design system, then integrate AI at system level

- Don’t: Let every team experiment independently without coordination

- Timeline: 2-4 months design system hardening, 3+ months AI integration

Scenario C: Enterprise with Potential Silos

- Context: High maturity potential, risk of drift from team independence

- Do: Stand up Design Intelligence Ops to prevent drift, upskill DesignOps team on AI governance

- Don’t: Assume current DesignOps can absorb AI governance responsibilities without new skills

- Timeline: Quarterly milestones

Measuring Success: Beyond Efficiency

Track three categories of metrics:

Efficiency (necessary but insufficient):

- Time saved per designer

- Cycle time reduction

- Variants generated per sprint

Quality (the real test):

- User satisfaction scores

- Task completion rates

- Error rates

- Accessibility compliance

Drift prevention (the innovation metric):

- Design system adherence rate (% of UI using approved components)

- Cross-product consistency score (do similar features behave similarly?)

- Rework rate (how often do we redesign because earlier work didn’t follow standards?)

Why drift metrics matter: At PayPal, we didn’t have formal drift metrics, but we saw the symptoms in the Unified Card System project (4 different “add card” flows, inconsistent UI patterns across products). These were drift made visible. With AI, drift can be hidden. Each AI-generated design might look fine individually, but collectively they diverge from standards. You need leading indicators to catch this early.

Competing Perspectives

Some organizations will succeed with bottom-up, unstructured AI adoption, particularly those with strong design culture, deeply internalized values, and high individual judgment.

Some teams should wait. If you’re still figuring out basic design workflows or what “good design” means in your context, AI will amplify confusion rather than clarity.

Some leaders believe AI will replace DesignOps. I believe the opposite: AI makes DesignOps more critical. The more autonomous and powerful the tools, the more important the operational scaffolding that ensures they’re used well.

There’s also risk in over-engineering frameworks. Not every team needs formal governance; rigid compliance can stifle the rapid learning loops AI enables. The key is matching structure to stakes and maturity.

The risk: If you misjudge your readiness, you accelerate toward failure rather than success.

What This Framework Doesn’t Address

This framework doesn’t address:

- Highly regulated industries in depth (healthcare, pharma, financial services with strict regulatory oversight beyond GDPR)

- Organizations without baseline design thinking capability (if your org doesn’t value user research or design rigor, start there; AI won’t fix that)

- Global and cultural considerations (data sovereignty requirements, cultural differences in AI adoption, non-Western design practices, emerging market contexts where resource constraints differ fundamentally from enterprise environments)

- Non-product design contexts (service design, brand design, physical product design have different dynamics)

- Physical AI and multimodal continuity (emerging 2026+ trends that complicate standard digital DesignOps)

What Leaders Should Ask

Not “Should we use AI in design?” (You will, whether you plan it or not; your designers are already using AI tools.)

The question is: “Do we have the operational scaffolding to use AI without destroying what makes our products human-centered?”

If no: Build scaffolding first. Use the maturity assessment. Fix the foundations.

If yes: Use this framework. Start with individual copilots, scale to AI-powered design systems, evolve to Design Intelligence Ops as maturity allows.

Because years of scaling design operations have taught me this: Tools change. Speed increases. But the fundamentals (user advocacy, design thinking, operational discipline) remain. AI is just the latest test of whether you’ve built those foundations well.

The companies that recognize this pattern and build operational scaffolding now will scale AI successfully. Those that chase speed without structure will retrofit under pressure or fail trying.